Category Archives for Open Data

New Resource for Learning Choroplethr

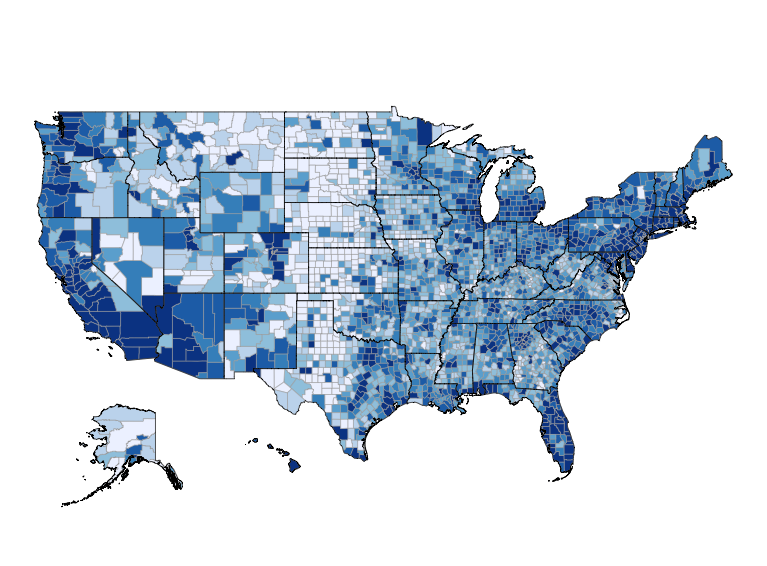

Last week I had the honor of giving a one-hour talk about Choroplethr at a private company. When the company reached out to me about speaking there, I originally planned to give the same talk I gave at the CDC two years ago. But as I reviewed the CDC talk, I realized two things: My […]

Bug when Creating Reference Maps with Choroplethr

Last week the Census Bureau published a free course I created on using Choroplethr to map US Census data. Unfortunately, a few people have reported problems when following one of the examples in the course. This post describes the issue and explains how to work around it. Where does the bug occur? The bug occurs […]

New Free R Course on Census.gov!

Today I am happy to announce that a new free course on Choroplethr, "Mapping Census Bureau Data in R with Choroplethr", is available on Census Academy, the US Census Bureau's new training platform! (Choroplethr is a suite of R packages I created for mapping demographic statistics.) This course is a more in-depth version of Learn […]

“A Guide to Working With Census Data in R” is now Complete!

Two weeks ago I mentioned that I was clearing my calendar until I finished writing A Guide to Working with Census Data in R. Today I'm happy to announce that the Guide is complete! I even registered a dedicated domain for the project: RCensusGuide.info. The Guide is designed to address two problems that people who […]

Update on R Consortium Census Guide

As I mentioned in July, my proposal to the R Consortium to create a Guide to Working with Census Data in R was accepted. Today I’d like to share an update on the project. The proposal breaks the creation of the guide into four milestones. So far Logan Powell and I have completed two of those milestones: […]
