Thoughts on Becoming a “Certified Cloud Practitioner”

Last month I passed the Amazon Web Services (AWS) Certified Cloud Practitioner (CCP) exam. I decided to write up my experience with the exam because it might help others who are looking to learn more about cloud computing. One of the hardest things in tech is the pace at which technology changes. All new technologies […]

Continue reading

Getting Started with Python (Again): OOP and VS Code

Last month I set a goal of dusting off my Python. While I use R exclusively at work, some recent developments in Python caught my eye, and so I thought it would be good to regain my proficiency with the language. Here are some resources that have helped me on this journey – hopefully they […]

Continue reading

Request for Resources: Teaching Computer Science Basics to R Programmers

One of the most enjoyable parts of my job is teaching R to our incoming analysts. These analysts are largely recent college graduates with a limited background in computer science and statistics. Historically my job has been to teach them the basics of the Tidyverse over 3 half-days. I’m writing today to see if anyone […]

Continue reading

New package on CRAN: zctaCrosswalk

I am happy to announce that my new R package, zctaCrosswalk, is now on CRAN. This package contains the US Census Bureau’s 2020 ZCTA to County Relationship File, as well as convenience functions to translate between States, Counties and ZIP Code Tabulation Areas (ZCTAs). You can install the package like this: install.packages("zctaCrosswalk") This package is […]

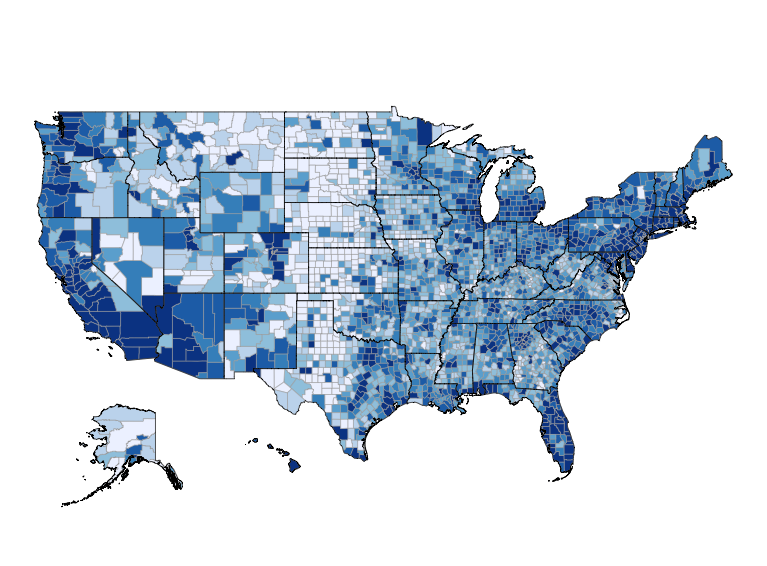

Continue reading

choroplethr 3.7.1 is now on CRAN

When I took my first software engineering job at Electronic Arts 20 years ago, someone told me, “More time is spent maintaining old software than writing new software.” Since my project at the time (“Spore”) was brand new, and I was writing brand new code for it, I found that hard to believe. My experience […]

Continue reading